Power Your Applications with NVIDIA V100 GPUs

The World’s Most Powerful GPU Server

At Webyne Data Center, we bring you the unmatched performance of NVIDIA Tesla V100 GPUs, designed specifically for AI, machine learning, deep learning, big data, and high-performance computing (HPC). With Cloud V100 GPU instances, you can process massive datasets, train complex AI models, and run advanced simulations faster than ever.

Affordable GPU Power: Upgrade Your Cloud Today

Get More Done: Unleash GPU Performance at a Great Price

V100-Dev

- 1×V100
- 8 vCPU
- 64 GB RAM
- 100 GB NVMe SSD
- 1 Gbps network
- AI Dev, Fine-tuning, Research
- SLA 99.95%
- 24×7 Support

V100-Pro

- 2×V100
- 16 vCPU
- 128 GB RAM
- 200 GB NVMe SSD
- 1-10 Gbps network
- Stable Diffusion XL, Llama-13B
- SLA 99.95%
- 24×7 Support

V100-4XL

- 4×V100
- 32 vCPU
- 260 GB RAM
- 400 GB NVMe SSD
- 10 Gbps network
- Mid LLM (13B–30B), Research
- SLA 99.95%
- 24×7 Support

V100-8Pod

- 8×V100 (NVLink)
- 48+ vCPU
- 512 GB RAM
- 800 GB NVMe SSD
- 25-100 Gbps network
- Large LLM Training (70B), RLHF
- SLA 99.95%
- 24×7 Support

V100 Full Box (Dedicated)

- 8×V100 Node
- 48+ vCPU
- 512 GB RAM
- 800 GB NVMe SSD
- 25-100 Gbps network
- Dedicated GPU Server
- SLA 99.95%
- 24×7 Support
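As a rough way to match a model size to one of the plans above, the sketch below estimates the memory needed for the model weights alone in half precision (2 bytes per parameter) and compares it with the combined GPU memory of each plan. The per-GPU memory is an assumption, since the plan list does not say whether the 16 GB or 32 GB V100 variant is used, and the function names are illustrative. Training needs considerably more memory than this for gradients, optimizer state, and activations, so treat the result as a lower bound.

```python
# Rough weights-only memory estimate; a lower bound, not a sizing guarantee.
BYTES_PER_PARAM_FP16 = 2  # half precision stores each parameter in 2 bytes

# GPU counts taken from the plan list above.
PLAN_GPUS = {"V100-Dev": 1, "V100-Pro": 2, "V100-4XL": 4, "V100-8Pod": 8}


def weights_gb(params_billions: float) -> float:
    """Memory for the FP16 weights alone, in GB (10^9 bytes)."""
    return params_billions * BYTES_PER_PARAM_FP16


def smallest_plan(params_billions: float, gb_per_gpu: int = 32):
    """Return the first plan whose combined GPU memory holds the FP16 weights.

    gb_per_gpu is an assumption: the V100 ships in 16 GB and 32 GB variants.
    Returns None when no listed plan is large enough.
    """
    need = weights_gb(params_billions)
    for name, gpus in PLAN_GPUS.items():
        if gpus * gb_per_gpu >= need:
            return name
    return None


if __name__ == "__main__":
    for size in (13, 30, 70):
        print(size, "B params:", weights_gb(size), "GB ->", smallest_plan(size))
```

Passing `gb_per_gpu=16` shows how the answer changes on the smaller V100 variant.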

Why Choose Webyne GPU Server Services India?

Webyne GPU Cloud Services offer unmatched performance, scalability, and flexibility for businesses across industries. Our infrastructure accelerates AI, machine learning, and HPC workloads, empowering you to achieve faster results while reducing costs and enhancing productivity with ease.

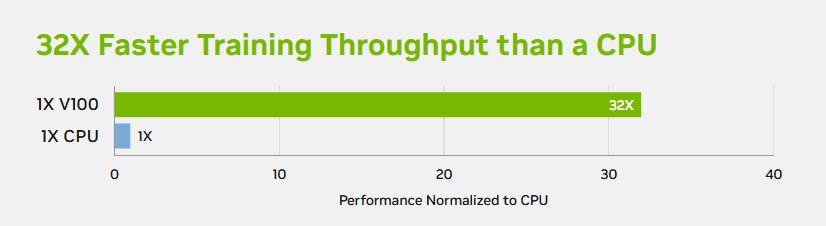

AI Training

With 640 Tensor Cores, V100 is the world’s first GPU to break the 100 teraflops (TFLOPS) barrier of deep learning performance. The next generation of NVIDIA NVLink™ connects multiple V100 GPUs at up to 300 GB/s to create the world’s most powerful computing servers. AI models that would consume weeks of computing resources on previous systems can now be trained in a few days. With this dramatic reduction in training time, a whole new world of problems will now be solvable with AI.
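The 100 TFLOPS figure can be checked with a short back-of-the-envelope calculation. The sketch below multiplies the 640 Tensor Cores mentioned above by the work each core does per clock cycle. The boost clock (1530 MHz, the SXM2 variant) and the 64 fused multiply-adds per core per clock are assumptions drawn from NVIDIA's published Volta specifications, not from this page, and the result is a theoretical peak rather than a measured training speed.

```python
TENSOR_CORES = 640           # from the text above
FMA_PER_CORE_PER_CLOCK = 64  # assumption: one 4x4x4 matrix multiply-accumulate per clock
FLOPS_PER_FMA = 2            # a fused multiply-add counts as two floating-point operations
BOOST_CLOCK_HZ = 1.53e9      # assumption: 1530 MHz boost clock (V100 SXM2)


def peak_tensor_tflops(cores: int, fma_per_clock: int, clock_hz: float) -> float:
    """Theoretical peak mixed-precision throughput in TFLOPS."""
    return cores * fma_per_clock * FLOPS_PER_FMA * clock_hz / 1e12


if __name__ == "__main__":
    peak = peak_tensor_tflops(TENSOR_CORES, FMA_PER_CORE_PER_CLOCK, BOOST_CLOCK_HZ)
    print(f"Peak Tensor Core throughput: {peak:.1f} TFLOPS")
```

Under these assumptions the peak comes out at roughly 125 TFLOPS, which is consistent with the claim of passing the 100 TFLOPS mark.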

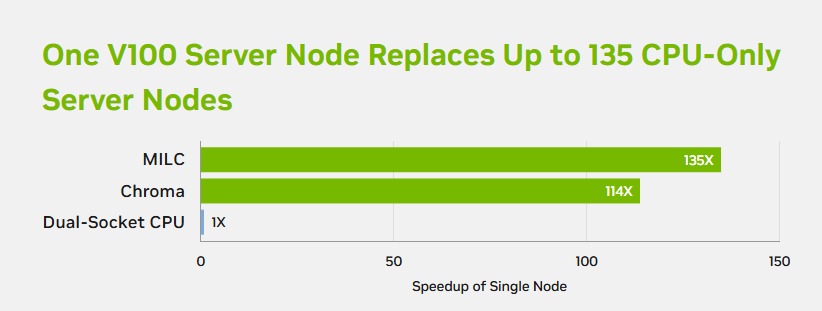

High Performance Computing (HPC)

V100 is engineered for the convergence of AI and HPC. It offers a platform for HPC systems to excel at both computational science for scientific simulation and data science for finding insights in data. By pairing NVIDIA CUDA® cores and Tensor Cores within a unified architecture, a single server with V100 GPUs can replace hundreds of commodity CPU-only servers for both traditional HPC and AI workloads. Every researcher and engineer can now afford an AI supercomputer to tackle their most challenging work.

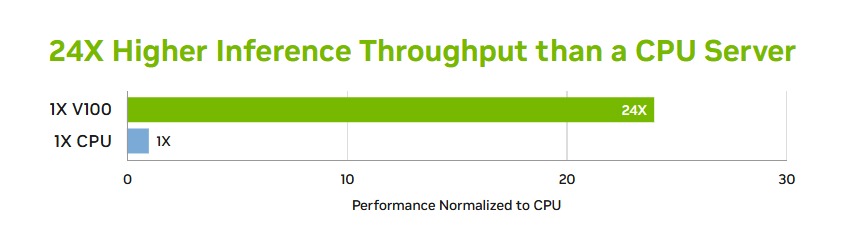

AI Inference

The NVIDIA V100 is engineered to deliver maximum performance in existing hyperscale server racks, making it a key component in modern AI infrastructure. Built on the Volta architecture, the V100 GPU is optimized for deep learning, high-performance computing (HPC), and data analytics. With AI at its core, the V100 delivers up to 24X higher inference performance compared to traditional CPU-based servers. This massive leap in throughput and computational efficiency enables data centers to accelerate AI workloads dramatically.

Key Features of Webyne GPU Cloud Platform

On-Demand Scalability

Webyne’s GPU cloud platform is built for scalability. Whether you’re a startup needing limited resources or an enterprise requiring hundreds of GPUs, our platform scales with your needs. With flexible pay-as-you-go pricing models, you only pay for the GPU resources you use, ensuring cost efficiency.

Enterprise-Grade Security

Hybrid Cloud Solutions

One of the key advantages of partnering with Webyne is our support for hybrid cloud architectures. We understand that some workloads require on-premise infrastructure, while others are better suited for the cloud. Our GPU servers seamlessly integrate with both cloud and on-premise environments, offering flexibility and control.

Operating Systems & Apps

Trending Solutions for Modern Workloads

Accelerating AI Training

AI Inference at Scale

HPC Meets AI

HPC and AI are converging to create new opportunities in industries such as healthcare, energy, and automotive. By combining the power of GPU servers with AI capabilities, Webyne delivers unprecedented performance for large-scale scientific research and data analysis. Our cloud platform is optimized for workloads that require both massive computational power and AI capabilities.

Get Started with Webyne GPU Cloud Today

Frequently Asked Questions about Graphics Processing Units

NVIDIA pairs the A100 SXM4 with 40 GB of HBM2e memory on a 5120-bit memory interface. The GPU runs at a base frequency of 1095 MHz and boosts up to 1410 MHz, while the memory runs at 1215 MHz.
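The interface width and memory clock quoted above are enough to estimate peak memory bandwidth. The sketch below assumes the memory transfers data twice per clock cycle (double data rate), which is how HBM2-class memory operates. The function name is illustrative.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, memory_clock_hz: float,
                        transfers_per_clock: int = 2) -> float:
    """Theoretical peak memory bandwidth in GB/s (10^9 bytes per second).

    transfers_per_clock=2 assumes double data rate signalling.
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * memory_clock_hz * transfers_per_clock / 1e9


if __name__ == "__main__":
    # 5120-bit interface and 1215 MHz memory clock, as quoted above.
    bw = peak_bandwidth_gb_s(5120, 1215e6)
    print(f"Peak memory bandwidth: {bw:.1f} GB/s")
```

With the quoted figures this works out to about 1555 GB/s for the 40 GB card.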

The NVIDIA A100 is a data center-grade GPU, part of a larger NVIDIA solution that allows organizations to build large-scale ML infrastructure. It is a dual-slot, 10.5-inch PCI Express Gen4 card based on the Ampere GA100 GPU.

The A100 80GB features the fastest memory bandwidth in the world, exceeding 2 terabytes per second (TB/s), making it capable of handling the largest models and datasets.

Equipped with up to 80 gigabytes (GB) of high-bandwidth memory (HBM2e), the A100 is the first GPU to exceed 2 TB/s of memory bandwidth, and it reaches 95% efficiency in dynamic random-access memory (DRAM) utilization. It also offers 1.7 times the memory bandwidth of its predecessor.

80 GB

Absolutely! With our Managed GPU Hosting, we handle everything from server maintenance to performance optimization, allowing you to focus on developing your applications.

GPU Cloud Hosting allows you to spin up GPU-accelerated virtual machines in the cloud, making it easier to scale resources dynamically. GPU VPS Hosting, on the other hand, gives you dedicated resources on a virtual private server, offering more control over the hardware.

Webyne Virtual Machine Pricing: Detailed Estimates

Unlock Predictable Pricing with Our All-in-One Packages and Start Saving Today! Discover the Best Cost-Effective Cloud Hosting Options Compared to AWS, GCP, and Azure.

| Utho | AWS | GCP | Azure |
| --- | --- | --- | --- |
| $18.00 | $155.00 | $156.00 | $157.00 |
| $36.00 | $174.00 | $242.00 | $232.00 |
| $91.00 | $310.00 | $445.00 | $417.00 |

All prices include bandwidth.
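To put the comparison above into percentages, the sketch below computes how much lower each Utho price is than the corresponding AWS, GCP, and Azure price. The three price groups are simply numbered in the order they appear. The source does not name the underlying VM configurations or the billing period, so those labels are placeholders.

```python
# Prices copied from the comparison above, in the order listed.
PRICES = {
    "Utho":  [18.00, 36.00, 91.00],
    "AWS":   [155.00, 174.00, 310.00],
    "GCP":   [156.00, 242.00, 445.00],
    "Azure": [157.00, 232.00, 417.00],
}


def savings_percent(base_price: float, other_price: float) -> float:
    """Percentage by which base_price is lower than other_price."""
    return (1 - base_price / other_price) * 100


if __name__ == "__main__":
    for group, utho_price in enumerate(PRICES["Utho"]):
        for provider in ("AWS", "GCP", "Azure"):
            pct = savings_percent(utho_price, PRICES[provider][group])
            print(f"Group {group + 1}: {pct:.1f}% lower than {provider}")
```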